Esxcli Software Vib Install Nexus 1000v

I am having some problems with VSM/VEM connectivity after an upgrade that I'm hoping someone can help with. I have a two-host ESXi cluster that I am upgrading from vSphere 5.0 to 5.5u1, and I am also upgrading a Nexus 1000V from SV2(2.1) to SV2(2.2). I upgraded vCenter without issue (I'm using the vCSA), but when I attempted to upgrade ESXi-1 to 5.5u1 using VUM, it complained that a VIB was incompatible. After tracing this VIB to the 1000V VEM, I created an ESXi 5.5u1 installer package containing the SV2(2.2) VEM VIB for ESXi 5.5 and tried VUM again, but it still failed.

I then removed the VEM VIB from the vDS and the host, and was able to upgrade the host to 5.5u1. When I tried to add it back to the vDS, I got the error below:

vDS operation failed on host esxi1, Received SOAP response fault from : invokeHostTransactionCall Received SOAP response fault from : invokeHostTransactionCall An error occurred during host configuration. Got (vim.fault.PlatformConfigFault) exception

I installed the VEM VIB manually at the CLI with 'esxcli software vib install -d /tmp/cisco-vem-v164-4.2.1.2.2.2.0-3.2.1.zip', and I'm able to add it to the vDS. However, when I connect the uplinks and migrate the L3 Control VMkernel interface, I get the following error complaining about the SPROM when the module comes online, and then it eventually drops the VEM:

2014 Mar 29 15:34:54 n1kv %VEM_MGR-2-VEM_MGR_DETECTED: Host esxi1 detected as module 3
2014 Mar 29 15:34:54 n1kv %VDC_MGR-2-VDC_CRITICAL: vdc_mgr has hit a critical error: SPROM data is invalid. Please reprogram your SPROM!

Hi,

The SETPORTSTATEFAIL message is usually thrown when there is a communication issue between the VSM and the VEM while the port-channel interface is being programmed.

- What is the uplink port profile configuration?
- Are other hosts using this uplink port profile successfully?
- Is the upstream configuration the same on an affected host and a working host (i.e. control VLAN allowed where necessary)?

Per kpate's post, the control VLAN needs to be a system VLAN on the uplink port profile. The VDC SPROM message is a cosmetic defect.

HTH,
Joe
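Joe's checklist boils down to one rule on the uplink: the control VLAN must be allowed on the trunk and declared as a system VLAN, so the VEM can reach the VSM before the VSM has programmed the host. The sketch below encodes that rule as a check over the text of an Ethernet port-profile. It assumes the profile is pasted in as plain text and only looks at the `switchport trunk allowed vlan` and `system vlan` lines; the profile name `system-uplink` and the VLAN numbers are hypothetical.

```python
# Hypothetical uplink profile used for illustration only.
UPLINK_EXAMPLE = """\
port-profile type ethernet system-uplink
  vmware port-group
  switchport mode trunk
  switchport trunk allowed vlan 10,20-22
  system vlan 10,20
  no shutdown
  state enabled
"""


def parse_vlan_list(spec: str) -> set[int]:
    """Expand a plain VLAN list such as '10,20-22' into {10, 20, 21, 22}.

    The 'add', 'remove', 'except' and 'all' keyword forms are not handled.
    """
    vlans: set[int] = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            start, end = (int(x) for x in part.split("-", 1))
            vlans.update(range(start, end + 1))
        else:
            vlans.add(int(part))
    return vlans


def check_uplink_profile(config: str, control_vlan: int) -> list[str]:
    """List problems with the control VLAN on an Ethernet uplink port-profile."""
    allowed: set[int] = set()
    system: set[int] = set()
    for raw in config.splitlines():
        line = raw.strip()
        if line.startswith("switchport trunk allowed vlan "):
            allowed |= parse_vlan_list(line.removeprefix("switchport trunk allowed vlan "))
        elif line.startswith("system vlan "):
            system |= parse_vlan_list(line.removeprefix("system vlan "))

    problems: list[str] = []
    if control_vlan not in allowed:
        problems.append(f"control VLAN {control_vlan} is not allowed on the trunk")
    if control_vlan not in system:
        problems.append(f"control VLAN {control_vlan} is not a system VLAN")
    # System VLANs that the trunk does not carry cannot actually pass traffic.
    stray = sorted(system - allowed)
    if stray:
        problems.append(f"system VLANs missing from the trunk: {stray}")
    return problems


if __name__ == "__main__":
    print(check_uplink_profile(UPLINK_EXAMPLE, control_vlan=10))
    print(check_uplink_profile(UPLINK_EXAMPLE, control_vlan=21))
```

With the sample profile, control VLAN 10 passes with no problems, while VLAN 21 is carried on the trunk but flagged because it is not a system VLAN.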

I determined the issue a few weeks ago: the culprit was Jumbo Frames, which I had correctly turned on end to end, but I learned my NICs didn't support them. I went back to a host that was still on a previous version of the VEM and ran MTU 9000 pings successfully, but when I added -d (Don't Fragment) to the command it failed, indicating I never really had Jumbo Frames working. The new version of the VEM must be less tolerant of this misconfiguration than the old version. I have since turned off Jumbo Frames everywhere and am successfully keeping the VEMs connected to the VSMs. Thanks for the KB on the SPROM cosmetic issue; I had originally thought that was the problem, but now I know it's not.
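The trap described in the post is that an oversized ping without the Don't Fragment bit still succeeds, because the packet is simply fragmented along the way. A real end-to-end jumbo test needs DF set and an ICMP payload that fills the MTU exactly: 9000 bytes minus the 20-byte IPv4 header and the 8-byte ICMP header, i.e. 8972 bytes. The sketch below computes that size and builds the matching ESXi `vmkping` command (`-d` sets DF, `-s` sets the payload size). It assumes IPv4 with no IP options; the target address is a placeholder.

```python
IPV4_HEADER = 20  # bytes, assuming no IP options
ICMP_HEADER = 8   # bytes, ICMP echo request header


def max_icmp_payload(mtu: int) -> int:
    """Largest ICMP echo payload that fits in one IPv4 packet of the given MTU."""
    payload = mtu - IPV4_HEADER - ICMP_HEADER
    if payload <= 0:
        raise ValueError(f"MTU {mtu} is too small for an ICMP echo")
    return payload


def jumbo_test_command(target: str, mtu: int = 9000) -> str:
    """Build a vmkping command that only succeeds if the whole path carries `mtu`."""
    # -d sets Don't Fragment, so an undersized hop drops the packet instead of
    # fragmenting it; -s is the ICMP payload size, not the full packet size.
    return f"vmkping -d -s {max_icmp_payload(mtu)} {target}"


if __name__ == "__main__":
    print(jumbo_test_command("192.0.2.10"))  # vmkping -d -s 8972 192.0.2.10
    print(max_icmp_payload(1500))            # 1472
```

Running the printed command from the ESXi shell, once with and once without `-d`, reproduces the comparison described in the post.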

Installing the Cisco Nexus 1000V distributed virtual switch is not that difficult, once you have learned some new concepts. Before I jump straight into installing the Nexus 1000V, let's run through the vSphere networking options and some of the reasons you'd want to implement the Nexus 1000V.

vSS (vSphere Standard Switch)
Often referred to as vSwitch0, the standard vSwitch is the default virtual switch vSphere offers you, and provides essential networking features for the virtualisation of your environment. Some of these features include 802.1Q VLAN tagging, egress traffic shaping, basic security, and NIC teaming. However, the vSS (standard vSwitch) is an individual virtual switch on each ESX/ESXi host and has to be configured separately on every host. Most large environments rule this out, as they need to maintain a consistent configuration across all of their ESX/ESXi hosts.

Of course, VMware Host Profiles go some way to achieving this, but they still lack the features found in distributed switches.

vDS (vSphere Distributed Switch)
The vDS, also known as DVS (Distributed Virtual Switch), provides a single virtual switch that spans all of your hosts in the cluster, which makes multiple hosts in the virtual datacenter far easier to configure and manage. Features available with the vDS include 802.1Q VLAN tagging as before, but also ingress/egress traffic shaping, PVLANs (Private VLANs), and network vMotion. The key benefit of a distributed virtual switch is that you only have to manage a single switch.

Cisco Nexus 1000V
In terms of features and manageability, the Nexus 1000V goes over and above the vDS: it will feel familiar to anyone with existing Cisco skills, and it offers a heap of features that the vDS can't.

These include QoS tagging, LACP, and ACLs (Access Control Lists). Recently I have come across two Cisco UCS implementations that required the Nexus 1000V to support PVLANs in their particular configuration (due to the Fabric Interconnects using End-Host Mode). There are many reasons to implement the Cisco Nexus 1000V (let's call it N1KV for short). Without further delay, grab a coffee and we'll get the N1KV installed!


Components of the Cisco Nexus 1000V on VMware vSphere
There are two main components of the Cisco Nexus 1000V distributed virtual switch: the VSM (Virtual Supervisor Module) and the VEM (Virtual Ethernet Module). If you are familiar with Cisco products and have worked with physical Cisco switches, then you will already know what supervisor modules and Ethernet modules are. In essence, distributed virtual switches, whether the vSphere vDS or the N1KV, share a common architecture: a separate control plane and data plane, which is what makes them 'distributed' in the first place. Separating the control plane (VSM) from the data plane (VEM) is what makes a distributed switch architecture possible, as illustrated in the diagram here (left). Another similarity is the use of port groups, which you should already know from both the VMware vSS and vDS.

In Cisco terms, we’re talking about ‘port profiles’, and they are configured with the relevant VLANs, QoS, ACLs, etc. Port profiles are presented to vSphere as a port group.
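To make the port profile to port group mapping concrete, the sketch below renders a minimal vEthernet port-profile as N1KV configuration text. The `vmware port-group` line is what publishes the profile to vCenter as a port group, and `state enabled` activates it. The profile name `VM-Data` and VLAN 100 are hypothetical, and real profiles usually carry more settings (QoS, ACLs) than shown here.

```python
def vethernet_port_profile(name: str, access_vlan: int) -> str:
    """Render a minimal N1KV vEthernet port-profile for VM-facing ports."""
    # 0 and 4095 are reserved by 802.1Q, leaving 1-4094 for real VLANs.
    if not 1 <= access_vlan <= 4094:
        raise ValueError("802.1Q VLAN IDs run from 1 to 4094")
    lines = [
        f"port-profile type vethernet {name}",
        "  vmware port-group",             # publish to vCenter as a port group
        "  switchport mode access",
        f"  switchport access vlan {access_vlan}",
        "  no shutdown",
        "  state enabled",                 # make the profile active
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(vethernet_port_profile("VM-Data", 100))
```

Once a profile like this is applied on the VSM, it should appear in vCenter as a port group of the same name that VMs can be attached to.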

Installing the Cisco Nexus 1000V
What you need:
- A copy of the Cisco Nexus 1000V. Unless you already have a licensed copy, you can download the evaluation from the Cisco website. Note: you will need to register for a Cisco account in order to download the evaluation.
- A vSphere environment with vCenter. Note: I'm using my vSphere 5 lab for this exercise, but vSphere 4.1 will do fine.
- At least one ESX/ESXi host, preferably two or more!

If you are using a lab environment and don't have the physical hardware available, you can create a virtual (nested) ESXi server instead.

You'll also need to create the following VLANs:
- Control
- Management
- Packet

Note: If you are doing this in a lab environment then you can place all of the VLANs into a single VM network, but in production make sure you have separate VLANs for these.

In the latest release of the Nexus 1000V, the Java-based installer, which we will come on to in a moment, now deploys the VSM (or two VSMs in HA mode) to vCenter, and a GUI install wizard guides you through the steps. This has made deploying the N1KV even easier than before.
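The VLAN advice above (one shared network is fine in a lab, separate VLANs in production) can be expressed as a quick sanity check of a planned assignment. This is a sketch only: the role names and VLAN numbers are made up, and the only range rule applied is the usable 802.1Q VLAN ID range of 1-4094.

```python
def check_vlan_plan(plan: dict[str, int], production: bool = True) -> list[str]:
    """Check a Control/Management/Packet VLAN plan for range and overlap problems."""
    problems: list[str] = []
    for role, vlan in plan.items():
        # 0 and 4095 are reserved by 802.1Q, leaving 1-4094 for real VLANs.
        if not 1 <= vlan <= 4094:
            problems.append(f"{role}: VLAN {vlan} is outside 1-4094")
    if production:
        # In production each role should get its own VLAN.
        first_role: dict[int, str] = {}
        for role, vlan in plan.items():
            if vlan in first_role:
                problems.append(f"{role} shares VLAN {vlan} with {first_role[vlan]}")
            else:
                first_role[vlan] = role
    return problems


if __name__ == "__main__":
    print(check_vlan_plan({"control": 10, "management": 20, "packet": 30}))
    print(check_vlan_plan({"control": 10, "management": 10, "packet": 10}, production=False))
```

Passing `production=False` skips the overlap check, matching the lab shortcut of placing all three roles on one network.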

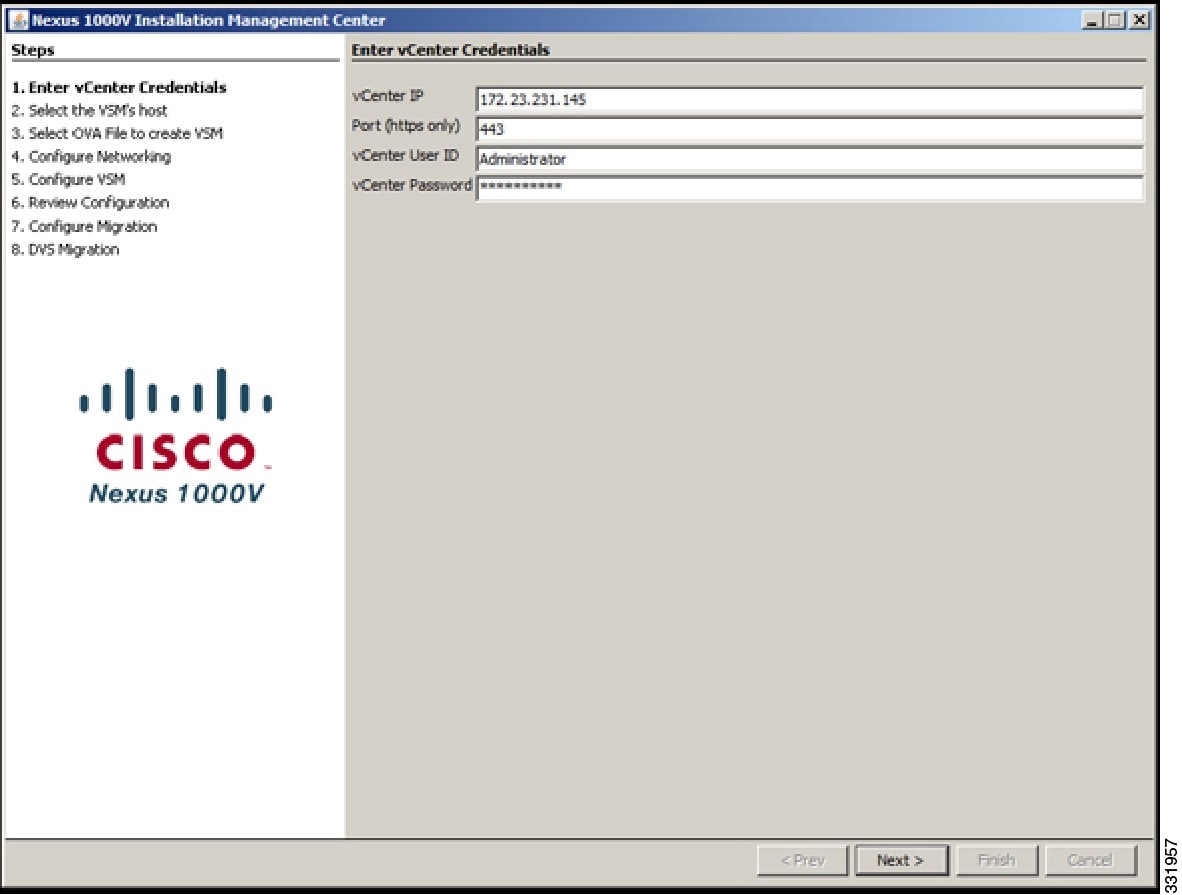

Once you have downloaded the Nexus 1000V from the Cisco website, continue on to the installation steps.

Installation Steps:
1. Extract the .zip file you downloaded from Cisco and navigate to VSM/Installer_App/Nexus1000V-install.jar. Open this file (you need Java installed) to launch the installation wizard.
2. Enter the vCenter IP address, along with a username and password.
3. Select the vSphere host where the VSM will reside and click Next.
4. Select the OVA (in the VSM Install directory), the system redundancy option, the virtual machine name and the datastore, then click Next.

Note: This step is new; previously you had to deploy the OVA first and then run this wizard. If you choose HA as the redundancy option, the installer appends -1 or -2 to the virtual machine name.

Now configure the networking by selecting your Control, Management and Packet VLANs. Note: In my home lab, I just created three port groups to illustrate this. In production you would typically have these VLANs defined already; otherwise you can create new ones here on the Nexus 1000V.

Configure the VSM by entering the switch name, admin password and IP address settings. Note: The domain ID is shared by the two VSMs in HA mode, but each N1KV switch needs its own unique domain ID if you run more than one. For example, set the domain ID to 10. The native VLAN should be set to 1 unless otherwise specified by your network administrator.

You can now review your configuration. If it's all correct, click Next. The installer will now start deploying your VSM (or pair if using HA) with the configuration settings you entered during the wizard.
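The two wizard rules above (HA appends -1 and -2 to the VM name; an HA pair shares one domain ID while separate N1KV switches need different ones) can be captured in a few lines. This is a sketch only: the switch names are hypothetical, and the check mirrors just the uniqueness rule, not any range limits of the VSM CLI.

```python
def vsm_vm_names(base: str, ha: bool) -> list[str]:
    """VM names the installer creates: HA mode appends -1 and -2 to the name."""
    return [f"{base}-1", f"{base}-2"] if ha else [base]


def duplicate_domain_ids(switches: dict[str, int]) -> dict[int, list[str]]:
    """Map each domain ID used by more than one N1KV switch to those switches.

    Both VSMs of an HA pair belong to one switch, so they count once here.
    """
    by_id: dict[int, list[str]] = {}
    for switch, domain_id in switches.items():
        by_id.setdefault(domain_id, []).append(switch)
    return {d: names for d, names in by_id.items() if len(names) > 1}


if __name__ == "__main__":
    print(vsm_vm_names("n1kv-vsm", ha=True))
    print(duplicate_domain_ids({"n1kv-prod": 10, "n1kv-dev": 10, "n1kv-lab": 30}))
```

An empty result from `duplicate_domain_ids` means every switch in the plan has its own domain ID.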

Once it has deployed you’ll get an option to migrate this host and networks to the N1KV. Choose No here as we’ll do this later. Finally you’ll get the installation summary, and you can close the wizard.

You'll now see two Nexus 1000V VSM virtual machines (if you chose HA) in vCenter on your host. In a production environment you would typically place the VSMs on separate hosts for resilience. Within vCenter, if you navigate to Inventory > Networking you should now see the Nexus 1000V switch.

Installing the Cisco Nexus 1000V Virtual Ethernet Module (VEM) on ESXi 5
What we are actually doing here is installing the VEM on each of your ESX/ESXi hosts.

In the real world I prefer to use VMware Update Manager (VUM) to do this, as it automatically adds the VEM to a host when the host is added to the N1KV virtual switch. However, for this tutorial I will show you how to add the VEM from the command line on ESXi 5. Open a web browser and go to the Nexus 1000V web page. You will be presented with the Cisco Nexus 1000V extension (XML file) and the VEM software. It's the VEM we are interested in here, so download the VIB that corresponds to your ESX/ESXi build.

Copy the VIB file onto your ESX/ESXi host. This example uses /var/log/vmware, and esxcli must be given the full path to the file wherever you put it. Note: The datastore browser in vCenter is an easy way to upload the file; it then lives on the datastore under /vmfs/volumes/. Log into the ESXi console, either directly or using SSH (if it is enabled), and enter the following command:
# esxcli software vib install -v /var/log/vmware/cross_cisco-vem-v140-4.2.1.1.5.1.0-3.0.1.vib
You should then see the following result:
Installation Result
Message: Operation finished successfully.
Reboot Required: false
VIBs Installed: Cisco_bootbank_cisco-vem-v140-esx_4.2.1.1.5.1.0-3.0.1
VIBs Removed:
VIBs Skipped:
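The manual install can be scripted. The sketch below builds the `esxcli software vib install` command, using `-v` for a single .vib file and `-d` for an offline bundle .zip (as in the forum post at the top), and parses the installation result into a dictionary. The helper names are hypothetical, the sketch rejects relative paths to match the full paths used in both examples in this article, and the parser assumes the `Key: value` per line layout shown above.

```python
import posixpath
import shlex

# Result text as printed by the install above, used for illustration.
SAMPLE_RESULT = """\
Installation Result
   Message: Operation finished successfully.
   Reboot Required: false
   VIBs Installed: Cisco_bootbank_cisco-vem-v140-esx_4.2.1.1.5.1.0-3.0.1
   VIBs Removed:
   VIBs Skipped:
"""


def vib_install_command(path: str) -> str:
    """Build an esxcli install command for a local VIB file or offline bundle."""
    if not posixpath.isabs(path):
        raise ValueError("pass the full path on the ESXi host, e.g. /tmp/file.vib")
    if path.endswith(".vib"):
        flag = "-v"  # single VIB file
    elif path.endswith(".zip"):
        flag = "-d"  # offline bundle (depot) zip
    else:
        raise ValueError("expected a .vib or .zip file")
    return f"esxcli software vib install {flag} {shlex.quote(path)}"


def parse_install_result(text: str) -> dict[str, str]:
    """Turn the 'Key: value' lines of an esxcli install result into a dict."""
    result: dict[str, str] = {}
    for line in text.splitlines():
        # Split on the first colon only; lines without one (the title) are skipped.
        key, sep, value = line.strip().partition(":")
        if sep:
            result[key.strip()] = value.strip()
    return result


if __name__ == "__main__":
    print(vib_install_command("/var/log/vmware/cross_cisco-vem-v140-4.2.1.1.5.1.0-3.0.1.vib"))
    print(parse_install_result(SAMPLE_RESULT)["Reboot Required"])
```

Scraping human-readable output is fragile; esxcli's global `--formatter` option (for example `--formatter=csv`) is a sturdier source if you script this for real.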
